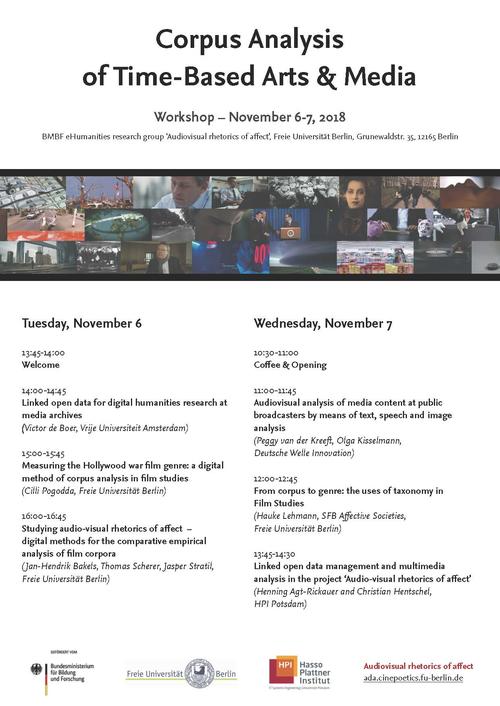

Corpus Analysis of Time-Based Arts & Media

06./07.11.2018 | Workshop by the BMBF eHumanities research group 'Audiovisual Rhetorics of Affect'.

After introducing the members of the research group, a collaboration between Freie Universität Berlin and the Hasso Plattner Institute in Potsdam, as well as the workshop participants, Jan-Hendrik Bakels formulated the core question of the event: how can the humanities make use of achievements in the field of digital corpus analysis and machine learning without having to adjust the focus of their research?

To tackle this question, Victor de Boer (Vrije Universiteit Amsterdam) offered insight into his research on audiovisual archives, especially the renowned Netherlands Institute for Sound and Vision. His talk "Linked Open Data for Digital Humanities Research at Media Archives" concentrated on three keywords for digital archives: smart, connected, and open. The first of these, 'smart', is achieved through metadata annotation and corpus building. While machine learning is also involved, for example in extracting information from teletext subtitles for TV shows, the Institute relies heavily on social tagging. By complementing professional tagging by individual professionals with crowdsourcing, for which a reward system motivates niche audiences to tag audiovisual material in online databases, the Institute introduces a broad variety of tags into smart, ever-growing corpora.
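The combination of professional and crowdsourced tags can be illustrated with a minimal Python sketch. It assumes a simple rule that was not described in the talk: professional tags are always kept, a crowd tag is accepted once a hypothetical minimum number of distinct users (`MIN_CROWD_VOTES`) has submitted it, and contributors earn one point per accepted tag as a stand-in for a reward system. All names and data are hypothetical; this is not the Institute's actual pipeline.

```python
from collections import defaultdict

# Hypothetical rule: a crowd tag is accepted once this many distinct users agree.
MIN_CROWD_VOTES = 2


def merge_tags(professional, crowd, min_votes=MIN_CROWD_VOTES):
    """Combine professional tags with crowdsourced tags per item.

    professional: dict item_id -> set of tags from professionals (always kept)
    crowd: iterable of (item_id, user_id, tag) submissions
    Returns dict item_id -> sorted list of accepted, lower-cased tags.
    """
    # Distinct voters per (item, tag); a set ignores repeat votes by one user.
    voters = defaultdict(set)
    for item_id, user_id, tag in crowd:
        voters[(item_id, tag.strip().lower())].add(user_id)

    merged = defaultdict(set)
    for item_id, tags in professional.items():
        merged[item_id].update(t.strip().lower() for t in tags)
    for (item_id, tag), users in voters.items():
        if len(users) >= min_votes:
            merged[item_id].add(tag)
    return {item: sorted(tags) for item, tags in merged.items()}


def reward_points(crowd, accepted):
    """Hypothetical reward: one point per distinct (item, tag) a user got accepted."""
    earned = defaultdict(set)
    for item_id, user_id, tag in crowd:
        tag = tag.strip().lower()
        if tag in accepted.get(item_id, []):
            earned[user_id].add((item_id, tag))
    return {user: len(pairs) for user, pairs in earned.items()}


# Hypothetical example data.
professional = {"clip-1": {"news", "interview"}}
crowd = [
    ("clip-1", "u1", "Amsterdam"),
    ("clip-1", "u2", "amsterdam"),
    ("clip-1", "u1", "amsterdam"),  # repeat vote from u1 does not count twice
    ("clip-2", "u3", "tram"),       # a single voter, so not accepted yet
]
merged = merge_tags(professional, crowd)
points = reward_points(crowd, merged)
```

Requiring agreement between independent users is one simple way to filter noise from open tagging while still letting niche vocabulary enter the corpus.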

While the third keyword, 'open', is very important for the Institute's museum-oriented and educational approach, de Boer focused more on the 'connected' part of this threefold concept. In the digital age, it has become far more important for archives to move away from being mere silos of information. To cooperate with other organizations, audiovisual archives are working to standardize their collections and make them machine-readable. Other archives can then reuse this information, while each archive's own data is enriched by being linked to theirs. As an example, de Boer mentioned the collaborative database Wikidata, which has gathered over 500 million 'fact statements' to support Wikipedia articles. These statements can be combined and reused by humans as well as machines while being linked to all sorts of other data online.
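Fact statements of this kind are essentially subject-predicate-object triples. The following sketch uses plain Python sets of tuples rather than an RDF library to show how two archives' records connect once they share an identifier. The `ex:` identifiers, predicates, and values are hypothetical placeholders, not real Wikidata or archive data.

```python
# Each fact statement is a (subject, predicate, object) triple.
# All identifiers and values are hypothetical placeholders.
archive_a = {
    ("ex:film42", "ex:title", "Example Documentary"),
    ("ex:film42", "ex:broadcastYear", "1975"),
}
archive_b = {
    ("ex:film42", "ex:director", "ex:person7"),
    ("ex:person7", "ex:name", "Example Director"),
}


def merge_graphs(*graphs):
    """Union of triple sets; shared identifiers are what link the records."""
    merged = set()
    for graph in graphs:
        merged |= graph
    return merged


def describe(graph, subject):
    """Collect predicate -> set of objects for one subject."""
    facts = {}
    for s, p, o in graph:
        if s == subject:
            facts.setdefault(p, set()).add(o)
    return facts


graph = merge_graphs(archive_a, archive_b)
film = describe(graph, "ex:film42")
# Follow the link to the director's record, which only archive B holds.
director = next(iter(film["ex:director"]))
director_name = describe(graph, director)["ex:name"]
```

In practice, such data would typically be published as RDF with globally unique IRIs, so that identifiers from different institutions can be matched without relying on a shared local naming convention.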

Afterwards, Jan-Hendrik Bakels gave a presentation on the epistemological framework of the project 'Audiovisual Rhetorics of Affect.' At the core of the group's research lies the question of how audiovisual images shape embodied attitudes towards certain topics. The central goal is therefore to develop computational methods for the study of audiovisual rhetorics. However, current content analysis fails to acknowledge specific medialities as well as the emotional framework of the recipient. Audiovisual media usually rely less on what is communicated than on how these emotional framings are shaped.

In this regard, top-down taxonomies of media annotation constitute only a first step in analyzing the emotional composition of these phenomena. To grasp the rhetorics of affect, one needs to annotate aesthetic modalities such as rhythm and movement. By combining digital annotation methods with aesthetic modalities and concepts from film theory, the group builds a broader corpus that may cater to the scholarly needs of the humanities. In practice, this research prompted an adaptation of the annotation software Advene; the resulting annotation scheme comprises eight annotation levels (e.g. camera), 80 annotation types (e.g. camera angle), and hundreds of different annotation values (e.g. bird's-eye view).
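The three-tier structure of levels, types, and values can be sketched as a nested dictionary plus a validation step. Only the labels 'camera', 'camera angle', and 'bird's-eye view' come from the presentation; the remaining entries, the `Annotation` record, and the `validate` and `scheme_size` functions are hypothetical and do not reflect Advene's actual data model or API.

```python
from dataclasses import dataclass

# Hypothetical excerpt of a level -> type -> allowed values scheme.
# Only "camera", "camera angle" and "bird's-eye view" come from the talk.
SCHEME = {
    "camera": {
        "camera angle": {"bird's-eye view", "high angle", "eye level", "low angle"},
        "camera movement": {"static", "pan", "tilt", "tracking"},
    },
    "sound": {
        "music mood": {"happy", "sad", "tense"},
    },
}


@dataclass(frozen=True)
class Annotation:
    start: float  # seconds from the beginning of the film
    end: float
    level: str
    ann_type: str
    value: str


def validate(annotation, scheme=SCHEME):
    """Return the annotation unchanged, or raise ValueError if it breaks the scheme."""
    if annotation.end <= annotation.start:
        raise ValueError("end must be after start")
    types = scheme.get(annotation.level)
    if types is None:
        raise ValueError(f"unknown level: {annotation.level!r}")
    values = types.get(annotation.ann_type)
    if values is None:
        raise ValueError(f"unknown type {annotation.ann_type!r} for level {annotation.level!r}")
    if annotation.value not in values:
        raise ValueError(f"value {annotation.value!r} not allowed for type {annotation.ann_type!r}")
    return annotation


def scheme_size(scheme=SCHEME):
    """Count (levels, types, values); the scheme described in the talk has 8, 80, and hundreds."""
    n_levels = len(scheme)
    n_types = sum(len(types) for types in scheme.values())
    n_values = sum(len(v) for types in scheme.values() for v in types.values())
    return n_levels, n_types, n_values


shot = validate(Annotation(12.0, 15.5, "camera", "camera angle", "bird's-eye view"))
```

Constraining values to a controlled vocabulary per type is what makes annotations comparable across films and queryable later.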

Thomas Scherer and Jasper Stratil gave the third presentation of the workshop's first day. As members of the research group, they offered insight into their in-depth analysis of the film The Company Men (USA 2010) by John Wells. Their work on a sample sequence traced an affective trajectory from joyful anticipation to shock to sadness. This movement relies heavily on the music or, in terms of annotation, on the soundwave. While a 'swelling' quality is noticeable in the happy-mood music, the image-intrinsic movement intensifies before suddenly coming to a halt.
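How a figure such as 'intensification followed by a sudden halt' might be located in a numeric curve can be sketched as follows. The sketch assumes that movement intensity has already been measured per time step (the talk did not specify how); the function name and the parameters `rise_len` and `halt_ratio` are hypothetical choices.

```python
def find_swell_then_halt(intensity, rise_len=3, halt_ratio=0.2):
    """Return indices i where a steady rise ends in a sudden drop.

    intensity: non-negative movement intensity per time step (assumed input).
    A hit at i means intensity[i - rise_len .. i] strictly increases and
    intensity[i + 1] falls below halt_ratio * intensity[i].
    """
    hits = []
    for i in range(rise_len, len(intensity) - 1):
        window = intensity[i - rise_len : i + 1]
        rising = all(a < b for a, b in zip(window, window[1:]))
        halted = intensity[i + 1] < halt_ratio * intensity[i]
        if rising and halted:
            hits.append(i)
    return hits


# Hypothetical curve: movement builds up over five steps, then stops abruptly.
curve = [0.1, 0.2, 0.4, 0.7, 0.9, 0.05, 0.05, 0.3]
hits = find_swell_then_halt(curve)
```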

Such patterns can be found throughout the film's annotational representation in Advene. The different movements can thus be read like a musical score, as scholars (Cinemetrics) and, much earlier, filmmakers such as Sergei Eisenstein and Dziga Vertov have attempted. This makes it possible to look for recurring patterns at the macro level of the film, with the goal of creating a typology of figures of audiovisual rhetorics.
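Searching for recurring patterns at the macro level can be approximated by counting repeated n-grams (contiguous runs of n labels) in a sequence of annotation values. This is a generic technique chosen for illustration, not the group's documented method; the labels and the function `recurring_patterns` are hypothetical.

```python
from collections import Counter


def recurring_patterns(values, n=3, min_count=2):
    """Count contiguous n-grams of annotation values and keep those that recur."""
    grams = Counter(tuple(values[i : i + n]) for i in range(len(values) - n + 1))
    return {gram: count for gram, count in grams.items() if count >= min_count}


# Hypothetical sequence of affect labels, one per segment of a film.
sequence = ["anticipation", "joy", "shock", "sadness", "calm",
            "anticipation", "joy", "shock", "sadness"]
patterns = recurring_patterns(sequence, n=3)
```

Exact n-gram matching only finds literal repetitions; figures that recur with variations in tempo or length would call for more tolerant sequence comparison.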

The second day began with a presentation by Peggy van der Kreeft and Olga Kisselmann from "Deutsche Welle Innovation." In their talk entitled "Audiovisual Analysis of Media Content at Public Broadcasters by Means of Text, Speech and Image Analysis," they presented current approaches and projects pursued by Deutsche Welle (DW), the German international broadcaster. Applying automated techniques for content analysis, utilization, and editing is highly relevant for DW, as its programs are broadcast in 30 different languages. These conditions require several editorial departments to work more closely together and call for the development of automated translation (of both spoken word and written text), software for optimized searchability, concept extraction, shot/scene and visual similarity detection, as well as trend analysis of DW's own web presence. For these purposes, a mixture of proprietary and open programs and analytical tools is used: AXES OW 2, SUMMA, news.bridge, and Google Cloud. Within this framework, video retrieval and detection are strongly practice-oriented, as they are adapted to journalistic needs. More information on current projects can be found at blogs.dw.com/innovation.
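Shot boundary detection is often introduced via histogram differences: a hard cut tends to change the distribution of pixel intensities abruptly. The sketch below implements that classic approach on toy grayscale frames (flat lists of 0-255 values). It is not the tooling used at DW, and the threshold and bin count are hypothetical defaults that would need tuning on real footage.

```python
def histogram(frame, bins=16):
    """Normalized grayscale histogram of a frame given as a flat list of 0-255 values."""
    counts = [0] * bins
    for px in frame:
        counts[px * bins // 256] += 1
    total = len(frame)
    return [c / total for c in counts]


def detect_cuts(frames, threshold=0.5, bins=16):
    """Return indices i where a hard cut is assumed between frame i-1 and frame i.

    Uses the L1 distance between consecutive normalized histograms, which
    ranges from 0 (identical distributions) to 2 (no overlap at all).
    """
    if not frames:
        return []
    cuts = []
    prev = histogram(frames[0], bins)
    for i in range(1, len(frames)):
        cur = histogram(frames[i], bins)
        if sum(abs(a - b) for a, b in zip(prev, cur)) > threshold:
            cuts.append(i)
        prev = cur
    return cuts


# Toy frames: two dark frames, then two bright ones (one cut at index 2).
dark, dark2, bright = [10] * 100, [12] * 100, [200] * 100
frames = [dark, dark2, bright, bright]
cuts = detect_cuts(frames)
```

Gradual transitions such as dissolves and fades spread the change across many frames and can stay below a single-frame threshold, which is one reason practical systems combine several cues.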

After the break, the workshop continued with a project presentation by Hauke Lehmann. In his talk "From Corpus to Genre," he introduced the participants to the research of the project "Migrant Melodramas and Culture Clash Comedies" (CRC 1171 "Affective Societies"), which has been investigating the formation of a genre using the example of "Turkish-German Cinema." The project's basic question was: How do the films subsumed under this label relate to processes of community building, and what function do they fulfill in this process? A comparative analysis of the film corpus was then carried out to determine whether commonalities could be identified at the level of poetic concepts. According to Lehmann, defining "Turkish-German Cinema" in terms of ethnicity or certain topics presents problems that largely stem from the paradigm of identity politics. What most approaches to this subject fail to do is ask how the films actually address their audiences' everyday experiences, that is, how they affectively engage their spectators. Nor do they clarify the terms according to which audiovisual images can intervene in and change political discourse. To avoid these shortcomings, one would have to account for the encounter between screen and audience and for their involvement in the circulation of images. In theoretical and methodological terms, then, a sense of commonality (to which the films relate their viewers as media of embodiment) and specific pathos scenes make up the generic engine of the films in question.

Lehmann's talk was followed by a presentation by Henning Agt-Rickauer and Christian Hentschel (Hasso Plattner Institute): "Linked Open Data Management and Multimedia Analysis in the Project 'Audio-Visual Rhetorics of Affect.'" From a computer science perspective, Agt-Rickauer and Hentschel elaborated on the BMBF project's basic principles of data management. These comprise ways of managing the extensive film corpus, but also the development and publication of an ontology and corresponding annotations in the form of linked open data (http://ada.filmontology.org). Both speakers also offered insights into tools for searching and visualizing linked open data (Annotation Inspectator / Annotation Query) and presented first results of (semi-)automated shot, color, and voice detection. Last but not least, they talked about prospective ways of digitally publishing the final project results, with a strong focus on the interlocking of text and audiovisual material. One option would be to adapt a specific tool, Frametrail (https://frametrail.org), to the project's needs.
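Querying annotations published as linked open data usually means matching triple patterns that contain variables, as in SPARQL's basic graph patterns. The following sketch evaluates such a conjunction of patterns in plain Python. The `ex:` predicates and the annotation data are hypothetical and are not the vocabulary of the project's ontology; a real deployment would more likely rely on an RDF store queried with SPARQL.

```python
def match(pattern, triple, binding):
    """Extend `binding` so that `pattern` matches `triple`, or return None.

    Pattern terms starting with "?" are variables (assumption: no literal
    value in the data starts with "?").
    """
    new = dict(binding)
    for p, t in zip(pattern, triple):
        if p.startswith("?"):
            if p in new and new[p] != t:
                return None
            new[p] = t
        elif p != t:
            return None
    return new


def query(graph, patterns):
    """Evaluate a conjunction of triple patterns; return all variable bindings."""
    bindings = [{}]
    for pattern in patterns:
        next_bindings = []
        for b in bindings:
            for triple in graph:
                extended = match(pattern, triple, b)
                if extended is not None:
                    next_bindings.append(extended)
        bindings = next_bindings
    return bindings


# Hypothetical annotation triples for two films.
graph = {
    ("ex:ann1", "ex:annotates", "ex:film42"),
    ("ex:ann1", "ex:type", "ex:CameraAngle"),
    ("ex:ann1", "ex:value", "bird's-eye view"),
    ("ex:ann2", "ex:annotates", "ex:film42"),
    ("ex:ann2", "ex:type", "ex:CameraAngle"),
    ("ex:ann2", "ex:value", "low angle"),
    ("ex:ann3", "ex:annotates", "ex:film99"),
    ("ex:ann3", "ex:type", "ex:CameraAngle"),
    ("ex:ann3", "ex:value", "bird's-eye view"),
}
# Which films contain a bird's-eye view camera angle?
results = query(graph, [
    ("?a", "ex:type", "ex:CameraAngle"),
    ("?a", "ex:value", "bird's-eye view"),
    ("?a", "ex:annotates", "?film"),
])
films = sorted({b["?film"] for b in results})
```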